Since 2014, I had envisioned this story taking place underwater, an extraordinary and captivating backdrop that had been relatively unexplored in science fiction. However, developing this story and bringing it to life posed significant challenges due to budget limitations and my lack of experience with these specific visual effects. It became apparent that shooting real actors in an underwater environment was unfeasible, and working with practical effects was unfamiliar territory. Consequently, I had to rely primarily on myself and settled on CGI as the best choice. Once this decision was made, I could proceed with the project.

The initial versions of my script revolved around a group of divers struggling to survive, immersed in the water in neoprene suits, with no submersibles involved. However, a series of quick tests on dry land showed that integrating actors directly into the CGI underwater setting, without any intermediary layer, did not work. Regardless of how convincingly the actors simulated being underwater or how skillfully I merged the footage in post-production, the physicality and movement of real underwater motion could not be replicated elsewhere. One need only compare the films Avatar: The Way of Water and Aquaman to see the difference. It quickly became apparent that I needed something to act as a boundary between the actor and the water: a means to depict a character in motion underwater without actual swimming. I sought to bridge the visual and kinetic gap while also making it easy to integrate the actor into the CGI environment in post-production. This led to the conceptualization and creation of The High-Pressure Underwater Suit, a machine-like apparatus designed to house the actor within.

The Suit

The design and modeling of the underwater suit became a critical priority for my story. I had to carefully consider the suit’s anticipated motion requirements and how it would precisely function with a human occupant underwater. The incorporation of layered accessories posed an additional challenge. Elements such as arm chains and leg belts had to smoothly glide over the surface of the suit and remain mobile without intersecting with the actor’s body or the suit itself. Certain components were even made fully dynamic, lending the suit a crucial sense of realism.

The final phase of asset creation involved texturing and shading, which posed another unique challenge. It was crucial to develop a visually compelling appearance, particularly within the context of a low-visibility or underwater environment, to ensure that the textures stood out prominently. As a result, I opted for contrasting textures that emphasized the edges, aiding the audience in discerning the shape even in dimly lit and rapidly moving shots. Furthermore, I had to strategically incorporate additional simulated reflections onto the suit from invisible planes surrounding it. This was necessary because the reflections obtained from real-world conditions alone were insufficient to achieve the desired level of visual impact and luminosity.

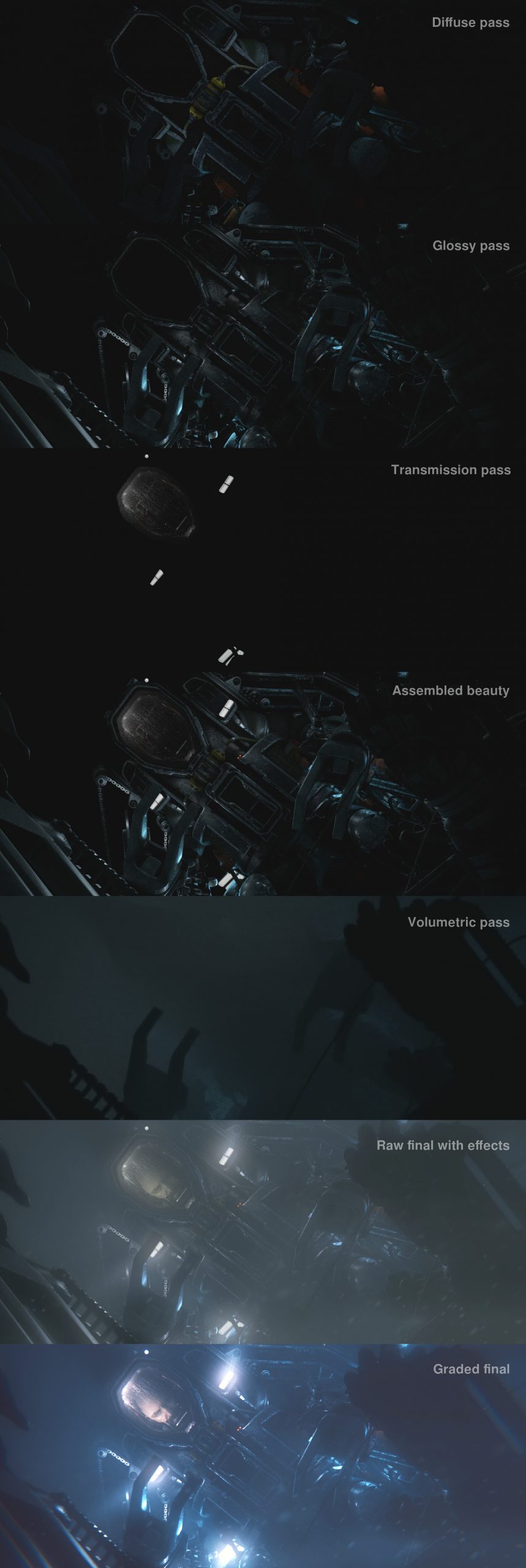

Underwater Environment

Once the hero asset was prepared, attention shifted towards the surrounding world. One of the most significant hurdles I faced involved the recreation of a convincingly realistic underwater environment using CGI. It was imperative to me that the visuals possessed a specific quality that was essential for capturing the essence of deep underwater shots. I aimed to evoke a somber, claustrophobic atmosphere, which, in retrospect, I may have pushed to an extreme in certain instances. Despite the unpredictable nature of the process in developing the final look of my shots, it was an exhilarating experience to witness my vision gradually taking shape before my eyes. The breakdown provided below illustrates the meticulous step-by-step process involved in creating each underwater shot in my film, from layout to final shot.

From a technological standpoint, the computational load of the underwater volume proved exceedingly demanding. Unlike professional productions that have access to hundreds of computers, I had only a single workstation at my disposal. Some quick calculations made it evident that I needed a strategy to manage the processing time of every pixel if I intended to eventually deliver the complete short film. For example, I reduced the final resolution from the original full HD to half HD, and I opted for a cropped 2.35:1 wide format. The wide format alone saved up to 25% of the processing time compared with larger 16:9 images.
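
The saving from the crop can be checked with simple arithmetic. The sketch below assumes that processing time scales roughly with the number of pixels in a frame, which is only an approximation, and that the frame width stays the same while the height shrinks. The function names are illustrative.

```python
def frame_height(width, aspect):
    """Height in pixels of a frame with the given width and aspect ratio."""
    return round(width / aspect)


def pixel_saving(width, wide_aspect=2.35, base_aspect=16 / 9):
    """Fraction of pixels saved by cropping a base-aspect frame to wide_aspect.

    Assumption: both frames share the same width, so only the height changes.
    """
    base_pixels = width * frame_height(width, base_aspect)
    wide_pixels = width * frame_height(width, wide_aspect)
    return 1 - wide_pixels / base_pixels


if __name__ == "__main__":
    # A 1920-wide frame drops from 1080 to 817 rows when cropped to 2.35:1.
    print(frame_height(1920, 2.35))
    print(f"{pixel_saving(1920):.1%} fewer pixels per frame")
```

Under these assumptions the crop removes a little under a quarter of the pixels, which is consistent with the "up to 25%" figure.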

Upon observing real-life footage, I came to recognize the critical role that small details play in establishing the overall believability of an underwater environment. It became evident that elements such as dynamic particles, drifting weeds, oxygen bubbles, and drifting dust played a captivating dance with one another, interacting harmoniously within the confines of a unified dynamic setting. Understanding the limitations of my resources and the need to maintain production momentum, I acknowledged that attaining such a high degree of realism would be very challenging. Consequently, I decided to emphasise certain secondary aspects of underwater imagery to enhance the illusion, such as chromatic dispersion or light absorption.

Actor Integration

With the main CGI elements set up, it was now the appropriate moment to figure out how to put an actual actor into the suit. I found the idea of incorporating a live actor in an underwater mechanized suit highly intriguing. From the start, my preference was for someone who exudes an ordinary demeanor rather than the stereotypical hero. The protagonist would bring a sense of realism to the entirely virtual surroundings, creating an impression of an everyday world. This approach aimed to make the experience more genuine and relatable.

The integration process itself comprised two distinct phases. The first phase involved accurately positioning the actor’s headshots. This required the actor to stay completely motionless from the neck down while performing a scene. The keyed-out footage was then projected onto a plane that constantly faced the camera and was fixed inside the interior space of the 3D suit. Once the head placement was perfected, the second phase involved incorporating the actor within the virtual suit itself. To achieve this, I removed all digital layers of the CGI image, including the glass, in order to access the interior of the suit. This was accomplished by rendering an additional separate layer that precisely replicated the glass and then subtracting that layer from the main image. The purpose of this approach was to decouple the actor’s footage from the main image, so that the footage could be switched or adjusted without reprocessing the entire main image each time. It was all about saving computational resources.
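
The decoupling idea can be illustrated with a generic matte blend. This is a minimal sketch, not the actual compositing setup used on the film: it assumes the separate glass layer is available as a per-pixel matte between 0 and 1, and that images are plain nested lists of RGB tuples. All names are hypothetical.

```python
def comp_pixel(cg, footage, matte):
    """Blend one RGB pixel: matte=1 shows the footage, matte=0 keeps the CG render."""
    return tuple(c * (1.0 - matte) + f * matte for c, f in zip(cg, footage))


def comp_frame(cg_frame, footage_frame, matte_frame):
    """Apply comp_pixel to every pixel of equally sized frames (lists of rows)."""
    return [
        [comp_pixel(c, f, m) for c, f, m in zip(cg_row, fg_row, m_row)]
        for cg_row, fg_row, m_row in zip(cg_frame, footage_frame, matte_frame)
    ]


if __name__ == "__main__":
    cg = [[(1.0, 0.0, 0.0), (1.0, 0.0, 0.0)]]       # rendered suit, one row
    footage = [[(0.0, 1.0, 0.0), (0.0, 1.0, 0.0)]]  # keyed actor footage
    matte = [[0.0, 1.0]]                             # glass area is the second pixel
    print(comp_frame(cg, footage, matte))
```

Because the expensive CG render and the matte are computed once, swapping in a different take only means re-running this cheap blend.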

The process of decoupling the footage from the main image was further facilitated by the cubic design of the cockpit glass, which featured four divided sides – or “screens.” This unique configuration allowed me the freedom to manipulate each shot extensively, even enabling seamless transitions between different takes within a single shot.

However, a recurring challenge emerged when the footage of the actor’s head failed to seamlessly align with the 3D shot. This discrepancy arose due to differences in perspective or incomplete framing, often necessitating the removal of unwanted elements. To address this issue, I employed a re-animated 3D model of the actor’s head, precisely tracked to their performance. This allowed me to manipulate the footage to fit the desired specifications. Notably, the focal point primarily centered around the triangle defined by the eyes and mouth, with less emphasis on the remaining areas. For instances where the character was distant, I opted for a complete CGI head instead to expedite the process. Reflecting back, I now wonder how the outcome would have differed had I chosen to create the entire short using CGI. These ingenious shortcuts played a crucial role in bridging the pieces I ardently sought to connect.

Dynamic Simulations

A crucial component of underwater imagery is the dynamic layer. This phase was undoubtedly the most daunting for me. I found myself relying on every technique I had learned from the dynamic simulation field in order to attain results that were deemed acceptable, at the very least. However, most of the time, I found myself tinkering with parameters without a thorough understanding of their underlying principles. Each time something usable emerged from my experimentation, it felt like a remarkable stroke of luck.

Small Bump on the Road

However, that luck was completely absent when this project encountered its darkest hour. Every project, I suppose, has its own. It is at this critical juncture that the fate of a project is determined: whether it will ultimately be completed or abandoned.

To get straight to the point: to render out an entire six minutes of animation, I depended heavily on cutting-edge GPU rendering technology, which was relatively new and carried some risks back in 2015. It played a crucial role in processing every pixel of my short film. Given my budget, the GPU was indispensable; without it, completing this project would have been simply impossible.

Little did I know there was a hidden catch. An unbreakable hardware limitation: a maximum threshold of approximately 120 image textures allowed per individual scene. As the shots progressed and the assets reached their final stages, the number of required image files skyrocketed to 250-300 textures, surpassing the imposed restriction.

When I found out and hit the wall, the production momentum came to a screeching halt, leaving me in a state of utter uncertainty. I can vividly recall that evening, sitting in front of a completely pink shot (an indication that an image file was missing) and feeling completely exhausted. Silence enveloped me as I grappled with the situation. Everything seemed to be collapsing around me, and the thought of giving up crossed my mind. In that moment of pure despair, I felt myself spiraling downward mentally.

In a nutshell, the solution had been right before my eyes the entire time. It may seem obvious now, but it was far from apparent back then. In a moment of sudden inspiration, I realized that I could condense the information from the initial set of 300 textures into just a few newly generated massive ones, reducing the texture count below 120. The entire process was automated through customised scripts (including UV corrections on all assets, etc.). In the span of two days, I transformed the 300 regular-sized textures into 50 colossal ones without compromising any essential elements. This breakthrough instantly revitalized both the project and my confidence.
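
Merging many textures into a few large ones is commonly known as texture atlasing. The sketch below is not the actual script used on the film. It assumes every texture has the same size, that UV coordinates stay within the 0 to 1 range, and that six textures are tiled into each atlas on a hypothetical 3x2 grid (picked here only because 300 / 6 = 50). The function names are made up for illustration.

```python
import math


def atlas_count(n_textures, cols, rows):
    """Number of atlases needed when each atlas holds cols * rows tiles."""
    return math.ceil(n_textures / (cols * rows))


def atlas_slot(index, cols, rows):
    """Return (atlas index, column, row) of the tile assigned to texture `index`."""
    atlas, local = divmod(index, cols * rows)
    row, col = divmod(local, cols)
    return atlas, col, row


def remap_uv(u, v, col, row, cols, rows):
    """UV correction: squeeze a 0..1 UV into its tile inside the atlas."""
    return (col + u) / cols, (row + v) / rows


if __name__ == "__main__":
    cols, rows = 3, 2  # hypothetical grid layout
    print(atlas_count(300, cols, rows))   # atlases needed for 300 textures
    atlas, col, row = atlas_slot(7, cols, rows)
    print(atlas, col, row)                # where texture number 7 ends up
    print(remap_uv(0.5, 0.5, col, row, cols, rows))
```

Every mesh that referenced an original texture then points at its atlas instead, with each UV passed through `remap_uv`. Textures that tile or repeat outside the 0 to 1 range would need separate handling.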

From that point forward, I was determined to see this film through to its completion, regardless of any obstacles that might arise. The newfound energy propelled me back into action, and I diligently resumed the task of finalizing the remaining pieces.

Thank You!

After two years of arduous production, the short film Saurora was successfully released online in 2016. It garnered recognition from esteemed platforms such as CGBros, Blendernation, Alien Hive, 7th Matrix, SideFX and Film Shortage, and I would like to thank their administrators for their assistance in promoting the film.

Also, a heartfelt thank you goes out to my family, friends and all those who contributed to this project.

And finally, I’d like to express my gratitude to you – the people who enjoy what I do and give me the support that keeps me going!

You’re the best!
